Tony Alves, SVP of Product Management, and Joshua Routh, Product Director, recently attended the Researcher to Reader (R2R) Conference at the historic BMA House in London. Designed to bring together researchers, publishers, librarians, and other professionals in the scholarly information industry, the conference fosters dialogue on the evolving landscape of research communication. The general sessions covered a wide range of pressing topics, from research integrity and peer review innovations to open science and metadata standardization. However, the heart of the conference lies in its workshops—interactive sessions that allow participants to deeply engage with specific challenges and collaborate on shared visions and practical solutions. This is the second of four blog posts exploring key discussions and takeaways from the R2R 2025 conference, highlighting insights that are shaping the future of scholarly publishing.

Session: The Future of Research Integrity

The growing crisis of research integrity was a major theme at this year’s Researcher to Reader (R2R) Conference, with a dedicated session led by Nicko Goncharoff and Dr. Elliott Lumb (Signals). This session explored the systemic issues fueling research misconduct, the evolving role of investigative sleuths, and the technological advancements being deployed to counteract fraudulent practices in scholarly publishing.

The Persistent Problem of Research Fraud

Research misconduct has existed for decades, but its scale and sophistication have increased dramatically with modern technology. The session traced some of the most infamous cases of scientific fraud.

These historical cases were used to illustrate how the same structural pressures—publish-or-perish culture, competition for grants, and the need for career advancement—continue to drive researchers toward questionable practices today.

The session also covered modern examples of large-scale research fraud.

Speakers emphasized that generative AI tools have made it easier than ever to fabricate research papers, datasets, and even entire peer reviews. As a result, fraudulent researchers can evade detection and manipulate academic publishing at an unprecedented scale.

Strengthening Research Integrity

The session highlighted several ongoing efforts to combat research fraud, including:

- AI-driven tools for detecting misconduct.

- Publisher initiatives:

    - The STM Integrity Hub, which enables publishers to collaborate in identifying fraudulent submissions across multiple journals.

    - Dedicated research integrity teams being formed within major publishers to review flagged submissions and investigate anomalies.

- Independent research sleuths:

    - Scholars like Elisabeth Bik, who has personally flagged over 7,000 problematic articles.

    - Other sleuths such as Sholto David and Nick Wise, whose work has led to thousands of retractions.

Despite these efforts, panelists argued that the response from academic institutions has been slow and inconsistent. Many institutions remain reluctant to take decisive action due to concerns over reputation damage. Additionally, sleuths and whistleblowers often face harassment and professional risks for exposing fraudulent research.

Proposed solutions included:

- Formally recognizing research sleuths with institutional backing and academic rewards.

- Enhancing education on research integrity through university training programs.

- Reforming academic incentives to prioritize research quality over quantity.

Session: Trust Indicators for Research Integrity

Following the research integrity discussion, Dr. Heather Staines (Delta Think) moderated a session on trust indicators, featuring experts from STM Solutions, ORCID, and Cambridge University Press. The focus of this session was on how standardized metadata, retraction notices, and research provenance tracking can help mitigate misinformation and promote transparency in scholarly publishing.

Standardizing Retractions and Corrections

Jennifer Wright (Cambridge University Press) introduced CREC (Communication of Retractions, Removals, and Expressions of Concern), a newly published NISO standard (RP-45-2024) designed to:

- Standardize retraction notices, ensuring uniform placement within articles so that readers and machines can easily identify retracted content.

- Require metadata inclusion for retractions, making them searchable across indexing platforms.

- Establish metadata transfer protocols, enabling automated retraction updates across repositories and aggregators.

CREC aims to resolve the current inconsistency in which retractions often go unnoticed because publishers and repositories lack a unified approach to displaying and communicating correction notices.
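To make the three goals above more concrete, here is a minimal sketch of what a machine-readable notice and a consistently labeled title could look like. It is not the CREC schema: the `UpdateNotice` class, its field names, the `NOTICE_TYPES` labels, and the placeholder DOIs are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Hypothetical labels for the three notice kinds; not quoted from the standard.
NOTICE_TYPES = {"retraction", "removal", "expression_of_concern"}


@dataclass(frozen=True)
class UpdateNotice:
    notice_doi: str   # identifier of the notice itself
    target_doi: str   # identifier of the article the notice applies to
    notice_type: str  # expected to be one of NOTICE_TYPES
    issued: str       # ISO 8601 date string


def problems(notice: UpdateNotice) -> list[str]:
    """List reasons the notice would be hard to index or match to its article."""
    found = []
    if notice.notice_type not in NOTICE_TYPES:
        found.append(f"unknown notice type: {notice.notice_type!r}")
    if not notice.target_doi.strip():
        found.append("notice is not linked to the article it updates")
    if not notice.notice_doi.strip():
        found.append("notice has no identifier of its own")
    return found


def display_title(notice: UpdateNotice, article_title: str) -> str:
    """Put the status label first so readers and machines see it immediately."""
    label = notice.notice_type.replace("_", " ").upper()
    return f"{label}: {article_title}"


# Placeholder identifiers, for illustration only.
notice = UpdateNotice("10.5555/notice.1", "10.5555/article.1", "retraction", "2025-02-25")
print(problems(notice))
print(display_title(notice, "An Example Study"))
```

The point of the sketch is that a notice carrying its own identifier, a link to the affected article, and a controlled type can be validated and displayed the same way on every platform.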

Integrating Trust Indicators into the Scholarly Workflow

Dr. Hylke Koers (STM Solutions) introduced two major initiatives designed to integrate retraction and trust indicators into the research lifecycle:

- GetFTR (Get Full Text Research):

    - Provides one-click access to full-text PDFs.

    - Now includes real-time retraction warnings at the point of discovery, helping researchers avoid citing unreliable studies.

- CUSAP (Content-update Signaling & Alerting Protocol):

    - Pushes automated retraction notifications to repositories, indexing databases, and reference managers.

    - Addresses the gap where 80% of indexing services currently lack a retraction alert mechanism.
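The push-based idea behind CUSAP can be illustrated with a small in-memory publish/subscribe sketch. This is not the CUSAP protocol or its message format: the `AlertHub` and `ReferenceManager` classes, the alert dictionary keys, and the placeholder DOIs are hypothetical and only show the pattern of downstream services being notified rather than having to poll.

```python
from typing import Callable


class AlertHub:
    """In-memory stand-in for a service that pushes content-update alerts."""

    def __init__(self) -> None:
        self._handlers: list[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        """Register a downstream service that wants to receive alerts."""
        self._handlers.append(handler)

    def publish(self, alert: dict) -> int:
        """Deliver the alert to every subscriber and return how many were notified."""
        for handler in self._handlers:
            handler(alert)
        return len(self._handlers)


class ReferenceManager:
    """Hypothetical subscriber that flags retracted items in a user's library."""

    def __init__(self, library: set[str]) -> None:
        self.library = library
        self.flagged: set[str] = set()

    def on_alert(self, alert: dict) -> None:
        # Only alerts about items the user actually holds are relevant.
        if alert["doi"] in self.library:
            self.flagged.add(alert["doi"])


hub = AlertHub()
manager = ReferenceManager({"10.5555/a", "10.5555/b"})
hub.subscribe(manager.on_alert)
hub.publish({"doi": "10.5555/a", "status": "retracted"})
print(manager.flagged)
```

The design choice worth noting is the direction of the call: the hub invokes the subscriber, so a reference manager learns about a retraction as soon as it is published instead of waiting for its next scheduled check.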

Tom Demeranville (ORCID) discussed ORCID’s role as a researcher identity hub, providing verified academic records. ORCID trust markers include:

- Publication history validated by institutions.

- Peer review attribution validated by journals.

- Employment and funding records.

- DOI-linking capabilities to ensure research provenance.

However, a key challenge remains: ORCID does not incorporate negative indicators like retractions, as researchers control their own profiles. This highlights a trade-off between researcher autonomy and institutional oversight.

Connecting Research Integrity and Trust Indicators

The sessions on research integrity and trust indicators reinforced the idea that research misconduct and misinformation are two sides of the same coin. The rapid increase in retractions demands better mechanisms to detect, communicate, and prevent fraudulent research. Key parallels between the sessions include:

- The need for systematic fraud detection: Research integrity tools like AI-powered screening (ImageTwin, Clear Skies, STM Integrity Hub) must integrate with trust indicators like ORCID, CREC, and CUSAP to ensure a multi-layered defense against misinformation.

- Proactive vs. reactive approaches: While research integrity efforts focus on identifying fraud before publication, trust indicators help mitigate damage after publication. A holistic approach requires a balance between preventative AI-driven fraud detection and standardized post-publication transparency.

- Institutional accountability: Both sessions underscored that institutions play a crucial role in research integrity and must adopt clear policies to support transparency. Just as researchers should not rely solely on publishers for fraud detection, trust indicator adoption must extend beyond journals to funders, universities, and data repositories.

- Incentivizing ethical research practices: The academic reward system must shift from emphasizing publication quantity to prioritizing research quality and transparency. Integrating trust markers into academic evaluations and funding decisions can create positive incentives for integrity.

Together, these insights emphasize that ensuring research trustworthiness requires a unified effort across publishers, institutions, and funders. Without a coordinated approach, misinformation and fraudulent research will continue to slip through the cracks.

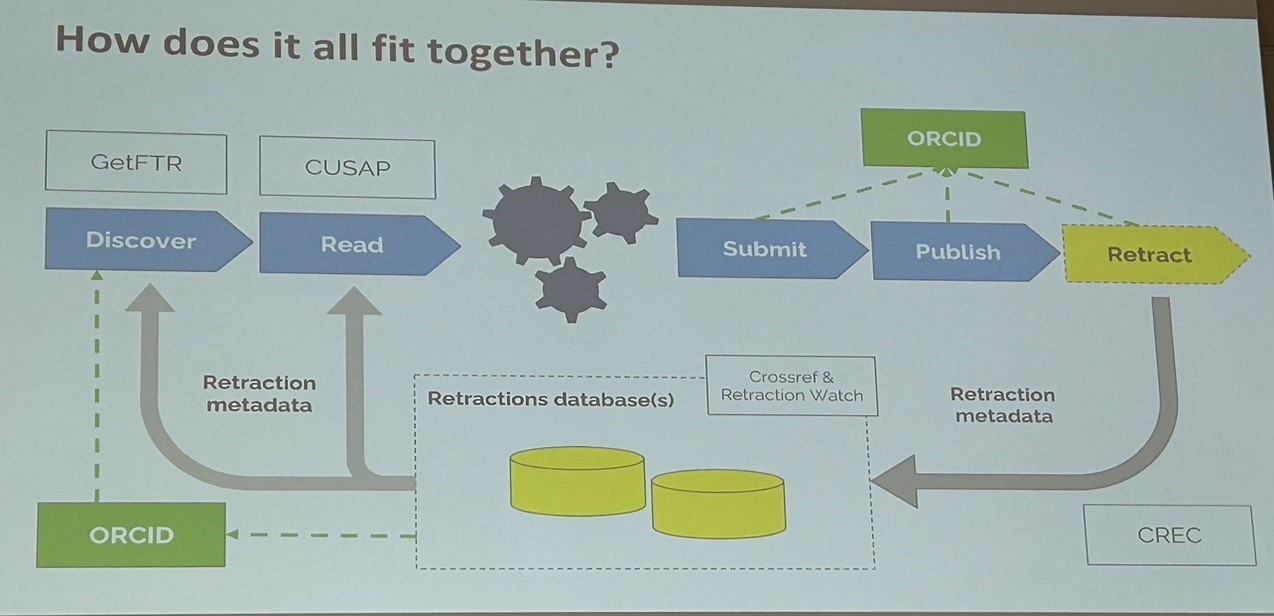

How It All Fits Together

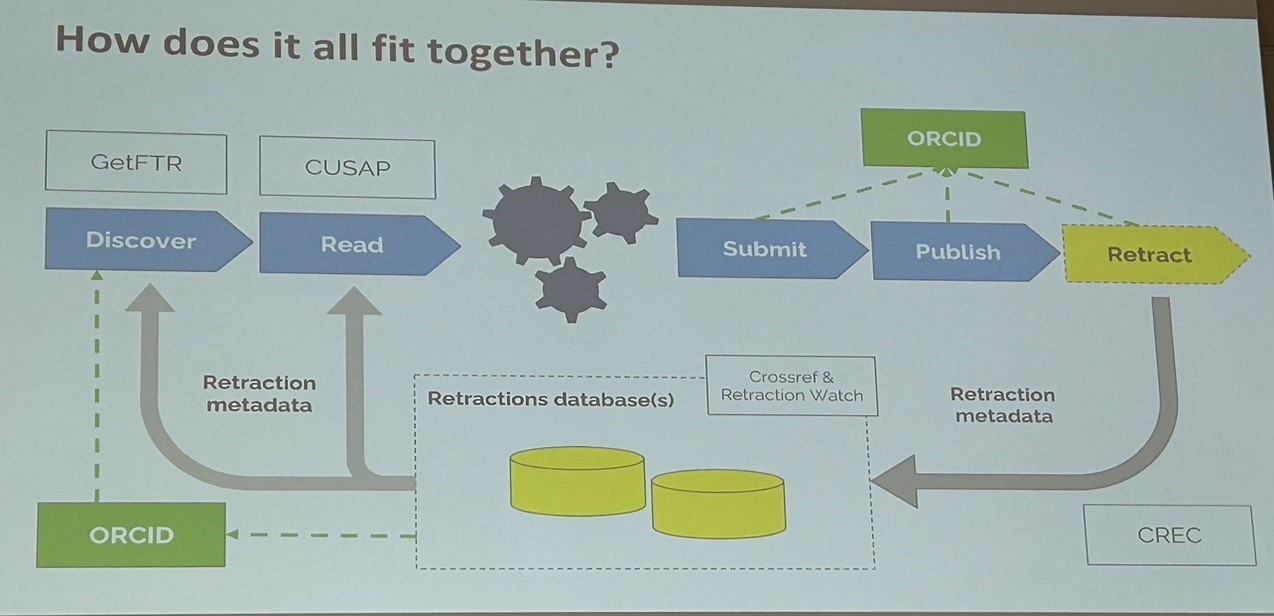

This image provides a visual representation of how different trust and retraction-tracking initiatives fit together in the scholarly publishing ecosystem. It illustrates the workflow from discovery to retraction, highlighting the roles of GetFTR, CUSAP, ORCID, Crossref, Retraction Watch, and CREC in ensuring that retractions and corrections are properly communicated to researchers.

Key Components in the Diagram:

- Discovery & Reading Phase:

    - GetFTR (Get Full Text Research) and CUSAP (Content-update Signaling & Alerting Protocol) are positioned in the “Discover” and “Read” phases.

    - GetFTR facilitates one-click access to full-text PDFs, while CUSAP is responsible for pushing retraction notifications to aggregators, repositories, and databases.

    - Retraction metadata from retractions databases (e.g., Crossref and Retraction Watch) is fed into these discovery systems.

- Publishing & Retraction Process:

    - The “Submit” and “Publish” phases represent the traditional academic workflow, where researchers submit manuscripts and publishers validate and publish content.

    - ORCID plays a role here by tracking researcher identities, affiliations, and trust markers.

    - If a paper is retracted, the “Retract” phase kicks in, feeding retraction metadata into systems like CREC (Communication of Retractions, Removals, and Expressions of Concern), which standardizes the display and metadata for retractions.

- Retraction Metadata Flow:

    - Retractions are logged in databases like Crossref & Retraction Watch, ensuring that corrections, retractions, and expressions of concern are widely disseminated.

    - This metadata is fed back into the discovery and reading phase, ensuring that researchers accessing the paper are alerted about its retraction.

How This Relates to the Trust Indicators Session:

- Jennifer Wright’s presentation on CREC aligns with the retraction metadata standardization step, ensuring consistent communication across platforms.

- Dr. Hylke Koers’ discussion on CUSAP is reflected in the push-based alert system seen here, making sure platforms receive real-time retraction updates.

- Tom Demeranville’s insights on ORCID fit into the Submit, Publish, and Retract sections, emphasizing that ORCID does not enforce value judgments but provides identity and provenance tracking.

Overall Takeaway:

This diagram shows a collaborative system where metadata on retractions and trust indicators is shared across multiple platforms, allowing researchers, institutions, and publishers to access up-to-date integrity signals. It eliminates the need for researchers to manually check multiple sources for retraction information, thus improving transparency and trust in the scholarly record.
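As a rough illustration of what replacing manual checks could look like, the sketch below matches a reference list against a single merged index of retraction records. The function names, the `(doi, status)` record shape, and the placeholder DOIs are assumptions; a real integration would load records from whichever retraction source a platform uses.

```python
# URL and scheme prefixes that commonly wrap a DOI in reference lists.
_PREFIXES = (
    "https://doi.org/",
    "http://doi.org/",
    "https://dx.doi.org/",
    "http://dx.doi.org/",
    "doi:",
)


def normalize_doi(raw: str) -> str:
    """Lowercase a DOI and strip common prefixes so variants compare equal."""
    doi = raw.strip().lower()  # DOIs are case-insensitive
    for prefix in _PREFIXES:
        if doi.startswith(prefix):
            doi = doi[len(prefix):]
            break
    return doi.strip()


def build_index(records) -> dict[str, str]:
    """Merge (doi, status) pairs from any number of sources into one lookup."""
    return {normalize_doi(doi): status for doi, status in records}


def flag_references(references, index: dict[str, str]) -> dict[str, str]:
    """Return each reference that has a known status, keyed as originally written."""
    flagged = {}
    for ref in references:
        status = index.get(normalize_doi(ref))
        if status is not None:
            flagged[ref] = status
    return flagged


# Placeholder records and references, for illustration only.
index = build_index([
    ("10.5555/ABC.1", "retracted"),
    ("doi:10.5555/xyz.2", "expression of concern"),
])
print(flag_references(["https://doi.org/10.5555/abc.1", "10.5555/ok.3"], index))
```

Normalizing identifiers before lookup matters because the same article can be cited as a bare DOI, a `doi:` string, or a resolver URL, and a missed match is a missed retraction warning.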

Towards a More Transparent Research Ecosystem

The Researcher to Reader Conference 2025 underscored the urgent need to address research integrity threats proactively. While tools like CREC, CUSAP, GetFTR, and ORCID trust markers offer solutions, their impact will be limited unless they are widely adopted.

To move forward, scholarly stakeholders must commit to:

- Enforcing transparency in retraction practices to prevent misinformation.

- Shifting research incentives to prioritize quality over quantity.

- Embedding trust indicators directly into research workflows to ensure widespread accessibility.

As research misconduct evolves, the key question remains: Can the scholarly community act swiftly enough to safeguard the credibility of academic publishing?

– By Tony Alves